Sounds reasonable. How many players was that with? Is that up on the forum somewhere?

I had it in a local spreadsheet where I was tracking tournament results (back when I was running the events). I didn’t have enough data to share, so it was just for my edification.

EricF’s old player rankings for the 2016 CASS series, which is longer ago than I’ve currently recorded, can be found here, if people want to compare.

I hadn’t taken a close look at your most recent post, @charnel_mouse, but it’s really awesome to see this project’s progress. This is a great contribution to the community.

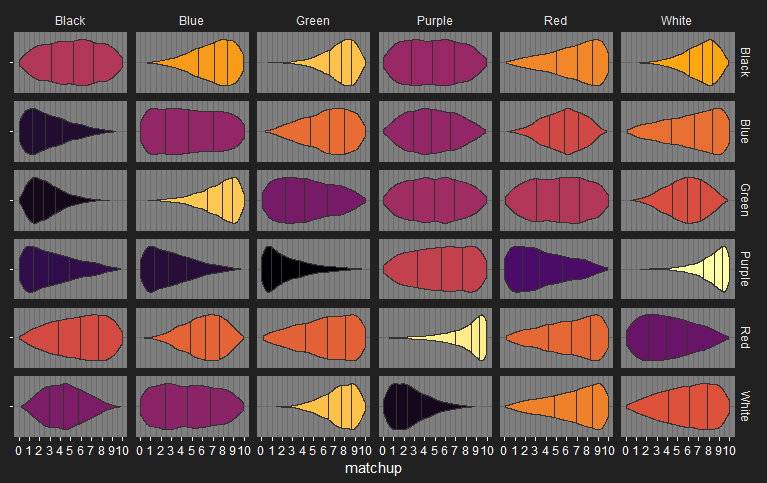

I wonder if there’d be any interest in a “peer review” games series, where we take the ten “least fair” matchups you listed in here, and try out playing ten games of just that matchup with a few different players at the helm of each. I agree with @Persephone that some of those matchups don’t jump out at me as intuitively lopsided.

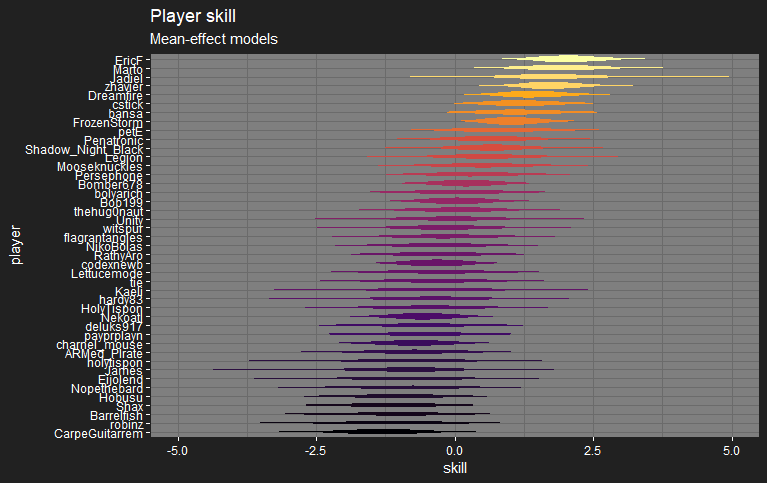

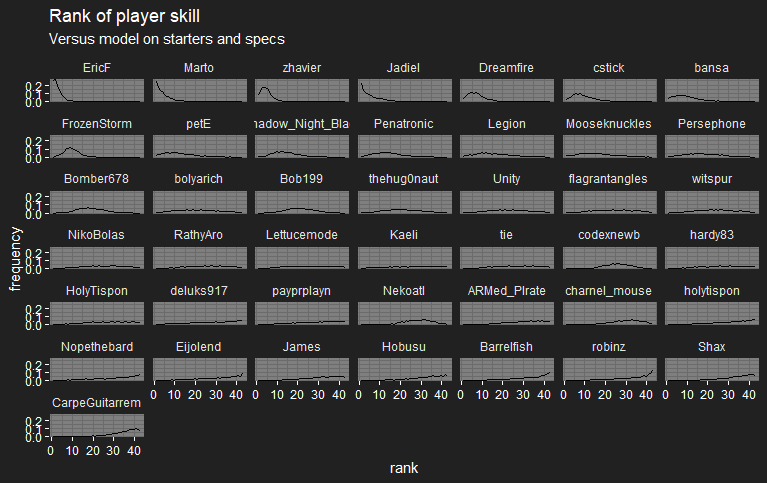

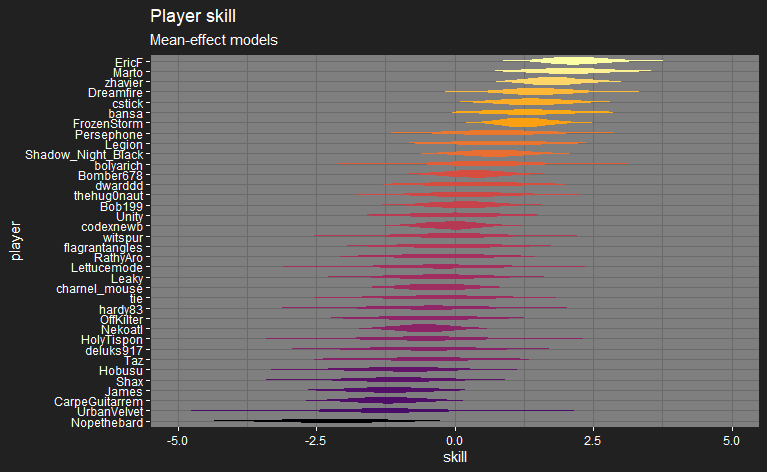

I will say, I think your player skill model is homing in pretty well. Seems like a decent rank order, w/ confidence intervals to match.

Thanks, @FrozenStorm! Yeah, some of those matchups look far too lopsided, don’t they? I’m a bit happier with their general directions now, though.

I’d be pretty happy if there was some sort of “peer review” series. Sort of a targeted MMM? I’d be up for running / helping out with that.

I had been vaguely thinking of a setup where I take some willing players and give them decks that the model thinks would result in an even matchup, or matchups the model was least certain about. However, I was thinking of doing this over all legal decks, not just monocolour. In the model’s current state, this would probably result in so many lopsided matches that I didn’t think it would be fair to the players.

A quick update on the changes after CAMS19.

bansa jumps up the board after coming second with a Blue starter. Persephone enters on the higher end of the board after a strong performance with MonoGreen (I have no match data yet from before her hiatus).

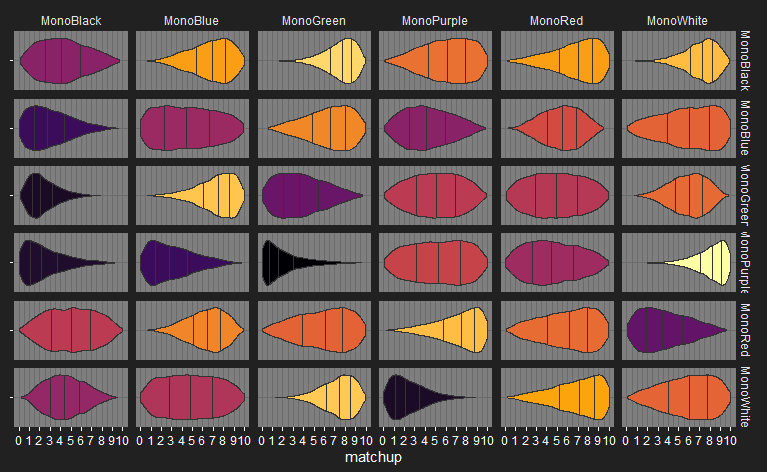

Monocolour matchup details

| | P1 deck | P2 deck | P1 win probability | matchup | fairness |

|---|---|---|---|---|---|

| 1 | Green | Purple | 0.493 | 4.9-5.1 | 0.99 |

| 2 | Black | Black | 0.519 | 5.2-4.8 | 0.96 |

| 3 | Black | Purple | 0.481 | 4.8-5.2 | 0.96 |

| 4 | Green | Red | 0.519 | 5.2-4.8 | 0.96 |

| 5 | Blue | Blue | 0.473 | 4.7-5.3 | 0.95 |

| 6 | Blue | Purple | 0.473 | 4.7-5.3 | 0.95 |

| 7 | White | Blue | 0.461 | 4.6-5.4 | 0.92 |

| 8 | Purple | Purple | 0.548 | 5.5-4.5 | 0.90 |

| 9 | White | Black | 0.439 | 4.4-5.6 | 0.88 |

| 10 | Blue | Red | 0.569 | 5.7-4.3 | 0.86 |

| 11 | Green | Green | 0.431 | 4.3-5.7 | 0.86 |

| 12 | Red | Black | 0.572 | 5.7-4.3 | 0.86 |

| 13 | Green | White | 0.581 | 5.8-4.2 | 0.84 |

| 14 | Red | White | 0.409 | 4.1-5.9 | 0.82 |

| 15 | White | White | 0.591 | 5.9-4.1 | 0.82 |

| 16 | Red | Green | 0.610 | 6.1-3.9 | 0.78 |

| 17 | Red | Blue | 0.615 | 6.1-3.9 | 0.77 |

| 18 | Red | Red | 0.620 | 6.2-3.8 | 0.76 |

| 19 | Blue | Green | 0.627 | 6.3-3.7 | 0.75 |

| 20 | Blue | White | 0.630 | 6.3-3.7 | 0.74 |

| 21 | Purple | Red | 0.367 | 3.7-6.3 | 0.73 |

| 22 | White | Red | 0.655 | 6.6-3.4 | 0.69 |

| 23 | Black | Red | 0.663 | 6.6-3.4 | 0.67 |

| 24 | Purple | Black | 0.323 | 3.2-6.8 | 0.65 |

| 25 | Black | Blue | 0.693 | 6.9-3.1 | 0.61 |

| 26 | Purple | Blue | 0.300 | 3.0-7.0 | 0.60 |

| 27 | Black | White | 0.709 | 7.1-2.9 | 0.58 |

| 28 | Blue | Black | 0.289 | 2.9-7.1 | 0.58 |

| 29 | White | Purple | 0.264 | 2.6-7.4 | 0.53 |

| 30 | Green | Black | 0.262 | 2.6-7.4 | 0.52 |

| 31 | Black | Green | 0.745 | 7.5-2.5 | 0.51 |

| 32 | White | Green | 0.743 | 7.4-2.6 | 0.51 |

| 33 | Green | Blue | 0.753 | 7.5-2.5 | 0.49 |

| 34 | Purple | Green | 0.240 | 2.4-7.6 | 0.48 |

| 35 | Red | Purple | 0.801 | 8.0-2.0 | 0.40 |

| 36 | Purple | White | 0.824 | 8.2-1.8 | 0.35 |

The main difference here is that P1 MonoPurple is considered to take a beating from P2 MonoGreen (2.4-7.6). This is because the matchup didn’t have much evidence before, and CAMS19 had the following relevant matches:

- Unity [Balance/Growth]/Present vs. Legion [Anarchy]/Growth/Strength, Legion wins

- FrozenStorm [Future/Past]/Finesse vs. Unity [Balance/Growth]/Present, Unity wins

- Unity [Balance/Growth]/Present vs. Persephone MonoGreen, Persephone wins

- FrozenStorm [Future/Past]/Finesse vs. charnel_mouse [Balance]/Blood/Strength, charnel_mouse wins

All P2 wins.

Current monocolour Nash equilibrium:

P1: Pick Black or Red at 16:19.

P2: Pick Black or White at 6:1.

Value: About 5.5-4.5, slightly P1-favoured.

Slight change in overall matchup; both players favour Black much less than before.

If the turn order is unknown, then both players have the same equilibrium strategy: pick Black, all the time, since it outperforms all the other monodecks when averaged over turn order.
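As a rough check on the equilibrium figures above, here is a minimal Python sketch. It assumes the equilibrium was computed from the point estimates in the monocolour table, and it takes the supports (Black/Red for P1, Black/White for P2) as given rather than deriving them. The names `P1_WIN` and `solve_2x2` are made up for illustration; this is not the model's own R/Stan code.

```python
# P1 win probabilities copied from the post-CAMS19 monocolour table,
# keyed by (P1 deck, P2 deck). Only the Black row/column and the
# Red-vs-White entry are needed for the two checks below.
P1_WIN = {
    ("Black", "Black"): 0.519, ("Black", "Purple"): 0.481,
    ("Black", "Red"): 0.663, ("Black", "Blue"): 0.693,
    ("Black", "White"): 0.709, ("Black", "Green"): 0.745,
    ("Purple", "Black"): 0.323, ("Red", "Black"): 0.572,
    ("Blue", "Black"): 0.289, ("White", "Black"): 0.439,
    ("Green", "Black"): 0.262, ("Red", "White"): 0.409,
}

def solve_2x2(a, b, c, d):
    """Mixed equilibrium of the zero-sum game [[a, b], [c, d]].

    Entries are the row player's win probabilities. Assumes the game has
    no saddle point, so both players mix and each makes the other
    indifferent between their two options.
    """
    denom = a - b - c + d
    p_row1 = (d - c) / denom   # row player's weight on row 1
    q_col1 = (d - b) / denom   # column player's weight on column 1
    value = (a * d - b * c) / denom
    return p_row1, q_col1, value

# Known turn order: P1 mixes Black/Red, P2 mixes Black/White.
p_black, q_black, value = solve_2x2(
    P1_WIN["Black", "Black"], P1_WIN["Black", "White"],
    P1_WIN["Red", "Black"], P1_WIN["Red", "White"],
)
print(f"P1 Black weight {p_black:.2f}, P2 Black weight {q_black:.2f}, value {value:.2f}")

# Unknown turn order: average Black's chances as P1 and as P2 against each other deck.
black_avg = {
    deck: 0.5 * (P1_WIN["Black", deck] + 1 - P1_WIN[deck, "Black"])
    for deck in ["Purple", "Red", "Blue", "White", "Green"]
}
print(black_avg)
```

The first check gives P1 a Black weight of about 0.46 (16:19 is roughly 0.457), P2 a Black weight of about 0.85 (6:1 is roughly 0.857), and a value of about 0.55. In the second check every turn-order-averaged entry for Black is above 0.5, which is consistent with always picking Black when the turn order is unknown.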

Next update should be for Metalize’s data.

I’m confused… these aren’t monocolor matchups, so why are they being considered for those monocolor probabilities? They indicate something about the starter choice, perhaps, but certainly not monocolor matchups…

Because the strength of a deck against another is modelled as the sum of 16 strength components:

- P1 starter vs. P2 starter (1 component, e.g. Green vs. Red)

- P1 starter vs. P2 specs (3 components, e.g. Green vs. Anarchy, Green vs. Growth, and Green vs. Strength)

- P1 specs vs. P2 starter (3 components, e.g. Balance vs. Red, Growth vs. Red, Present vs. Red)

- P1 specs vs. P2 specs (9 components I won’t list here, but which include Present vs. Growth for the first match)

For the example first match, only the Present vs. Growth component is relevant to the MonoPurple vs. MonoGreen matchup, but the second match has nine relevant components: any of Purple starter, Future spec, Past spec, vs. any of Green starter, Balance spec, Growth spec.
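To make the component bookkeeping concrete, here is a minimal Python sketch that enumerates the 16 opposed components for a pair of decks and counts how many are shared with the MonoPurple vs. MonoGreen matchup. It is not the model's actual R/Stan code; the function name `components`, the tuple layout, and the `"X starter"` labels are made up for illustration.

```python
from itertools import product

def components(p1_deck, p2_deck):
    """List the 16 opposed components for a P1 deck against a P2 deck.

    Each deck is (starter, [spec, spec, spec]); this layout is an
    illustrative assumption, not the model's own data format.
    """
    s1, specs1 = p1_deck
    s2, specs2 = p2_deck
    comps = [(s1, s2)]                        # 1 starter vs. starter
    comps += [(s1, spec) for spec in specs2]  # 3 starter vs. spec
    comps += [(spec, s2) for spec in specs1]  # 3 spec vs. starter
    comps += list(product(specs1, specs2))    # 9 spec vs. spec
    return comps

mono_purple = ("Purple starter", ["Future", "Past", "Present"])
mono_green = ("Green starter", ["Balance", "Feral", "Growth"])
target = set(components(mono_purple, mono_green))

# The first two CAMS19 matches listed above, as (P1 deck, P2 deck).
match1 = (("Green starter", ["Balance", "Growth", "Present"]),
          ("Red starter", ["Anarchy", "Growth", "Strength"]))
match2 = (("Purple starter", ["Future", "Past", "Finesse"]),
          ("Green starter", ["Balance", "Growth", "Present"]))

shared1 = target & set(components(*match1))
shared2 = target & set(components(*match2))
print(len(components(*match1)), len(shared1), len(shared2))  # prints: 16 1 9
```

Only `("Present", "Growth")` survives for the first match, while the second match shares nine components with the monocolour matchup, matching the counts described above.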

This allows a starter/spec’s performance in a deck to also inform its likely performance in other decks. I think this is reasonable, and if we don’t do this, the amount of information on most decks, including monodecks, is minuscule.

I originally introduced this approach here, albeit not in much detail:

I think Frozen is reasonably concerned that, without looking at the inner workings of the actual match, it’s a bit of a stretch to say present and growth actually faced off in any meaningful way. That said, such analysis of the inner workings of a match is well beyond the scope of this data compilation.

In a game where crashbarrows won the day, and the opponent was actively using strength, the fact that growth was an alternate option doesn’t directly mean growth is weak against crashbarrows, but taken together with player skill factors, the more skilled players will choose what they think is the best strategy vs a given deck and it will all even out in the long run.

In any event, I think the statistics are still informative.

Yeah, I can’t really evaluate whether they faced off without doing something much more complicated. The mere presence of a spec could shape the match without it ever being used, though, and the hero can affect things too. The meaning of the Present vs. Growth component is not how well those specs face off, it’s a partial evaluation of how well decks including them face off. You couldn’t just take the neutral components to evaluate the 1-spec starter game format, for example.

This was a ton of work! Thanks for attempting to do this. Also, I would be interested in testing 10-match mono-color intervals (with advice) to further strengthen match-up assertions.

Thanks! I’m going to wait and see how the results for the current tournament affect things; it should reduce the confounding between strong players and strong decks a bit. Then I’ll put the “lopsided” matches, minus Black vs. Blue, up in a thread, and see who’s interested. If you mean advice from other players, I was thinking of suggesting some warm-up matches, à la MMM1, but not enforcing it, so that could be a good time for that sort of thing.

I’m not sure about the exact format yet. @FrozenStorm was your suggestion above to take one matchup at a time, and have different pairs of players play one game each for a total series of ten? If we do it like that, I could put up the lopsided matches and do a poll on which one to do first/next, and it’s a bit less time-demanding than two players doing ten matches.

@charnel_mouse re-reading my previous post, I think the format I had in mind was something more akin to MMM’s format, where a sign-ups sheet is posted, either to just get a pool of players to be assigned decks, or more exactly like MMM for players to sign up for specific matchups (I think I prefer the latter, as this is meant in my eyes to be an opportunity to “challenge” what’s listed as a “bad matchup” by proving it more even.)

So something like:

- You compile a list of the 5-10 most “interesting” pieces of your data (perhaps we can have a Discord call w/ some experienced players to sort out which results from the data are most surprising to us, or put up a poll)

- Players then are invited to posit a challenge to those data (e.g. you have Purple vs Green as 2-8 and I think it’s much closer to even, so I volunteer to play purple. Or perhaps your data has Blue vs Red to be 6-4 and someone thinks it’s closer to 3-7 the opposite way. I’m making those numbers up but you catch my drift)

- Players w/ at least some experience on either deck are invited to help play out a 10-game series of that (I’d bring @Shadow_Night_Black in to help out with Purple maybe, for example, and I think @Mooseknuckles has played a fair share of Green starter at least? And @EricF and @zhavier are just really strong players who have played a fair bit of Growth and could probably puzzle out great plays?). It could be where we play in council with one another, or we just split up who plays how many games on which decks.

A bit sloppy of an idea, I’ll admit, but at least a means of seeing w/ experienced players “is this data reflective of more targeted testing?”

That sounds reasonable. Would it help if I also drew up the model’s matchup predictions when the players are taken into account? Re: Discord calls, if you wanted me on the call too we’d have to work around my being on GMT.

While we’re waiting for XCAFRR19 to finish up, I thought I’d put up what the model retroactively considers to be highlights from CAMS19 – the most recent standard tournament – and MMM1. The model currently considers player skills to be about twice as important as the decks in a matchup, so the MMM1 highlights might be helpful to provide context to some of the recent monocolour deck results.

CAMS19 fairest matches

| match name | result probability | fairness |

|---|---|---|

| CAMS19 R4 FrozenStorm [Future/Past]/Finesse vs. charnel_mouse [Balance]/Blood/Strength, won by charnel_mouse | 0.50 | 0.99 |

| CAMS19 R3 bansa [Law/Peace]/Finesse vs. zhavier Miracle Grow, won by bansa | 0.51 | 0.98 |

| CAMS19 R10 bansa [Law/Peace]/Finesse vs. zhavier Miracle Grow, won by zhavier | 0.49 | 0.98 |

| CAMS19 R3 codexnewb Nightmare vs. Legion Miracle Grow, won by codexnewb | 0.53 | 0.95 |

| CAMS19 R6 Persephone MonoGreen vs. bansa [Law/Peace]/Finesse, won by bansa | 0.47 | 0.94 |

CAMS19 upsets

| match name | result probability | fairness |

|---|---|---|

| CAMS19 R2 Legion Miracle Grow vs. EricF [Anarchy/Blood]/Demonology, won by Legion | 0.39 | 0.79 |

| CAMS19 R9 EricF [Anarchy/Blood]/Demonology vs. zhavier Miracle Grow, won by zhavier | 0.46 | 0.92 |

| CAMS19 R6 Persephone MonoGreen vs. bansa [Law/Peace]/Finesse, won by bansa | 0.47 | 0.94 |

| CAMS19 R10 bansa [Law/Peace]/Finesse vs. zhavier Miracle Grow, won by zhavier | 0.49 | 0.98 |

| CAMS19 R4 FrozenStorm [Future/Past]/Finesse vs. charnel_mouse [Balance]/Blood/Strength, won by charnel_mouse | 0.50 | 0.99 |

MMM1 fairest matches

| match name | P1 win probability | fairness | observed P1 win rate |

|---|---|---|---|

| zhavier MonoGreen vs. EricF MonoWhite | 0.51 | 0.99 | 0.6 (3/5) |

| FrozenStorm MonoBlue vs. Dreamfire MonoRed | 0.49 | 0.99 | 0.6 (3/5) |

| Bob199 MonoBlack vs. FrozenStorm MonoWhite | 0.47 | 0.95 | 0.6 (3/5) |

| cstick MonoGreen vs. codexnewb MonoBlack | 0.53 | 0.94 | 0.6 (3/5) |

| codexnewb MonoBlack vs. cstick MonoGreen | 0.45 | 0.90 | 0.4 (2/5) |

I’d recommend looking at the P1 Black vs. P2 White matchup, and both Black/Green matchups, in the latest monocolour matchup plot, to see how much the players involved can change a matchup.

MMM1 unfairest matchups

| match name | P1 win probability | fairness | observed P1 win rate |

|---|---|---|---|

| HolyTispon MonoPurple vs. Dreamfire MonoBlue | 0.08 | 0.15 | 0.0 (0/5) |

| Nekoatl MonoBlue vs. FrozenStorm MonoBlack | 0.09 | 0.17 | 0.0 (0/5) |

| FrozenStorm MonoBlack vs. Nekoatl MonoBlue | 0.90 | 0.20 | 1.0 (5/5) |

| Shadow_Night_Black MonoPurple vs. Bob199 MonoWhite | 0.87 | 0.27 | 1.0 (5/5) |

| Bob199 MonoWhite vs. Shadow_Night_Black MonoPurple | 0.16 | 0.32 | 0.0 (0/5) |

| Dreamfire MonoBlue vs. HolyTispon MonoPurple | 0.80 | 0.40 | 0.8 (4/5) |

| EricF MonoWhite vs. zhavier MonoGreen | 0.78 | 0.43 | 1.0 (5/5) |

A quick update after adding the results from XCAFRR19.

The player chart is getting a little crowded with all the new players recently – hooray! – so I’ve trimmed it to only show players that were active in 2018–2019.

Monocolour matchup details

Sorted by matchup fairness.

| P1 deck | P2 deck | P1 win probability | matchup | fairness |

|---|---|---|---|---|

| Green | Purple | 0.501 | 5.0-5.0 | 1.00 |

| Red | Black | 0.503 | 5.0-5.0 | 0.99 |

| Green | Red | 0.493 | 4.9-5.1 | 0.99 |

| White | Blue | 0.484 | 4.8-5.2 | 0.97 |

| Purple | Purple | 0.522 | 5.2-4.8 | 0.96 |

| Blue | Red | 0.541 | 5.4-4.6 | 0.92 |

| Purple | Red | 0.459 | 4.6-5.4 | 0.92 |

| Blue | Blue | 0.453 | 4.5-5.5 | 0.91 |

| White | Black | 0.443 | 4.4-5.6 | 0.89 |

| Black | Black | 0.426 | 4.3-5.7 | 0.85 |

| Blue | Purple | 0.427 | 4.3-5.7 | 0.85 |

| Red | Green | 0.581 | 5.8-4.2 | 0.84 |

| Blue | White | 0.583 | 5.8-4.2 | 0.83 |

| White | White | 0.584 | 5.8-4.2 | 0.83 |

| Red | Red | 0.595 | 6.0-4.0 | 0.81 |

| Green | White | 0.593 | 5.9-4.1 | 0.81 |

| Black | Purple | 0.602 | 6.0-4.0 | 0.80 |

| Green | Green | 0.378 | 3.8-6.2 | 0.76 |

| Red | Blue | 0.631 | 6.3-3.7 | 0.74 |

| Red | White | 0.367 | 3.7-6.3 | 0.74 |

| Blue | Green | 0.635 | 6.4-3.6 | 0.73 |

| Black | Blue | 0.668 | 6.7-3.3 | 0.66 |

| Black | Red | 0.670 | 6.7-3.3 | 0.66 |

| White | Red | 0.677 | 6.8-3.2 | 0.65 |

| Purple | Blue | 0.308 | 3.1-6.9 | 0.62 |

| Blue | Black | 0.306 | 3.1-6.9 | 0.61 |

| Red | Purple | 0.712 | 7.1-2.9 | 0.58 |

| Black | White | 0.709 | 7.1-2.9 | 0.58 |

| Green | Blue | 0.721 | 7.2-2.8 | 0.56 |

| White | Green | 0.726 | 7.3-2.7 | 0.55 |

| Purple | Black | 0.253 | 2.5-7.5 | 0.51 |

| Black | Green | 0.745 | 7.5-2.5 | 0.51 |

| White | Purple | 0.241 | 2.4-7.6 | 0.48 |

| Green | Black | 0.237 | 2.4-7.6 | 0.47 |

| Purple | Green | 0.203 | 2.0-8.0 | 0.41 |

| Purple | White | 0.798 | 8.0-2.0 | 0.40 |
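The thread doesn't spell out how the matchup and fairness columns are derived from the P1 win probability. The tabulated values are consistent with the formulas in the minimal Python sketch below, which are inferred from the numbers rather than taken from the model code, so treat them as an assumption. The function names are made up for illustration.

```python
def fairness(p1_win):
    """1.0 for a coin flip, falling linearly to 0.0 for a certain result."""
    return 1 - 2 * abs(p1_win - 0.5)

def matchup(p1_win):
    """Express a win probability as an 'x-y' split out of ten games."""
    return f"{10 * p1_win:.1f}-{10 * (1 - p1_win):.1f}"

# Three rows from the table above: Green/Purple, Purple/Green, Purple/White.
for p in (0.501, 0.203, 0.798):
    print(p, matchup(p), round(fairness(p), 2))
```

Because the table's probabilities are themselves rounded, recomputing fairness from them can differ from the listed value in the last digit for some rows.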

Some highlights from XCAFRR19. These are retrospective, i.e. after including their results in the (training) data.

Fairest XCAFRR19 matches

| match name | result probability | fairness |

|---|---|---|

| XCAFRR19 R1 FrozenStorm [Discipline]/Past/Peace vs. codexnewb [Future]/Anarchy/Peace, won by codexnewb | 0.50 | 1.00 |

| XCAFRR19 R7 Bomber678 [Feral/Growth]/Disease vs. James MonoBlack, won by James | 0.50 | 1.00 |

| XCAFRR19 R4 Nekoatl [Balance/Growth]/Disease vs. James [Disease/Necromancy]/Law, won by Nekoatl | 0.49 | 0.99 |

| XCAFRR19 R8 Nekoatl [Feral/Growth]/Disease vs. charnel_mouse [Discipline]/Law/Necromancy, won by charnel_mouse | 0.52 | 0.96 |

| XCAFRR19 R3 bolyarich [Feral/Growth]/Disease vs. charnel_mouse [Balance/Growth]/Disease, won by charnel_mouse | 0.52 | 0.95 |

| XCAFRR19 R4 EricF [Fire]/Disease/Truth vs. bolyarich MonoBlack, won by bolyarich | 0.47 | 0.94 |

| XCAFRR19 R5 codexnewb [Future]/Anarchy/Peace vs. OffKilter [Fire]/Growth/Present, won by OffKilter | 0.46 | 0.93 |

| XCAFRR19 R1 UrbanVelvet [Anarchy]/Past/Strength vs. CarpeGuitarrem Nightmare, won by UrbanVelvet | 0.54 | 0.93 |

| XCAFRR19 R2 codexnewb Nightmare vs. dwarddd MonoPurple, won by codexnewb | 0.54 | 0.92 |

| XCAFRR19 R5 Leaky MonoPurple vs. CarpeGuitarrem [Demonology]/Growth/Strength, won by CarpeGuitarrem | 0.55 | 0.91 |

XCAFRR19 upsets

| match name | result probability | fairness |

|---|---|---|

| XCAFRR19 R7 codexnewb MonoPurple vs. zhavier [Anarchy]/Past/Strength, won by codexnewb | 0.36 | 0.73 |

| XCAFRR19 R6 zhavier [Anarchy]/Past/Strength vs. FrozenStorm MonoPurple, won by FrozenStorm | 0.40 | 0.80 |

| XCAFRR19 R3 codexnewb [Future]/Anarchy/Peace vs. Leaky [Discipline]/Disease/Law, won by Leaky | 0.41 | 0.81 |

| XCAFRR19 R5 codexnewb [Future]/Anarchy/Peace vs. OffKilter [Fire]/Growth/Present, won by OffKilter | 0.46 | 0.93 |

| XCAFRR19 R4 EricF [Fire]/Disease/Truth vs. bolyarich MonoBlack, won by bolyarich | 0.47 | 0.94 |

| XCAFRR19 R4 Nekoatl [Balance/Growth]/Disease vs. James [Disease/Necromancy]/Law, won by Nekoatl | 0.49 | 0.99 |

| XCAFRR19 R1 FrozenStorm [Discipline]/Past/Peace vs. codexnewb [Future]/Anarchy/Peace, won by codexnewb | 0.50 | 1.00 |

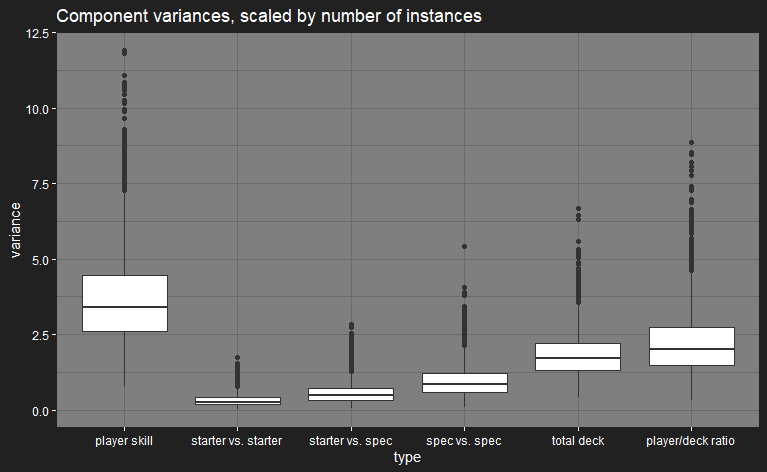

Here’s something new: the model estimates the variance in player skills and opposed deck components, in terms of their effect on the matchup. This means that I can directly compare the effect different things have on a matchup:

The boxes are scaled according to how many elements of that type go into each matchup: 2 players, 1 starter vs. starter, 6 starter vs. spec, 9 spec vs. spec. On average, the players’ skill levels have twice the effect on the matchup that the decks do.

Roughly speaking, that means that, if we took the multi-colour decks with the most lopsided matchup in the model, and gave the weak deck to an extremely strong player, e.g. EricF, and gave the strong deck to an average-skill player, e.g. codexnewb (average by forum-player standards, remember), we’d expect the matchup to be roughly even.

As always, comments or criticism about the results above are welcome. Let me know if there are particular games you’d like the results for too, although those should be available to view on the new site soon.

I’m in the process of making a small personal website, so I can stick the model results somewhere where I have more presentation format options. In particular, I look at the model’s match predictions using JavaScript-style DataTables, where you can sort and search on different fields (match name, model used, match fairness, etc.), so it would be nice if other people could use them too.

It will also let me more easily make the inner model workings more transparent. When I get time, I’m planning to add ways to examine cases of interest, like the Nash equilibrium among a given set of decks, or a way to view the chances of different decks against a given opposing deck and with given players. The latter, in particular, would be useful to let other people evaluate the model for matches that they’re playing.

I have other versions of the model that use Metalize’s data, but I’m going to delay showing those until I’ve finished putting up the site, and have tidied up the data a bit.

OK, it’s pretty rough, but I now have a first site version up here. I had some grappling with DataTables and Hugo to do, so I should be able to add things more easily after this first version.

Things I’m planning to add first:

- Results for other versions of the model, to show how it’s improved since the start of the project.

- A match data CSV, and R/Stan code.

- Downloadable simulation results data for people to play with, if anyone’s interested.

- Interactive plots. I’d like to have something to put in players and decks and see the predicted outcome distribution, like vengefulpickle’s Yomi page, instead of having to fiddle with column filters on the DataTable in the Opposed component effects section.

- Tighten up the wording to make it more clear to people who haven’t been following the thread.

I’ll still do update posts here about model improvements, interesting results etc.; the site’s there for people to play around with the model themselves.

Oh, and I’ve developed things to the point where I can easily plug in players/decks in a tournament and get matchup predictions. I’ll post up the predictions for CAWS19 matchups after it’s finished – I don’t want to prime anyone (else) by posting them beforehand – and take a look at how good they were.

Next tournament, my aim is to let the model tell me which deck to use. Not to play to win, more to find the model’s flaws.

The version on the site now has a section for Nash equilibria: against an evenly-matched opponent with double-blind deck picks, how should you randomise which deck to pick? I’ve currently done this for monocolour decks, and for all multicolour decks used in a recorded game. Doing this for all possible multicolour decks the way I currently do it would need several hundred GB of RAM, so I’m working on a version that doesn’t.

There are results for the usual case of not knowing who’ll go first / alternating turn order in a series, and for the case where you know before picks whether you’re going first or second.

A lot of the results – which I will try to make more intelligible soon – come down to “the model isn’t very certain about anything.” Go to the site if you want to stare at a bunch of tables. Here’s a few highlights, though:

- For monocolour games, if you’re going first, half the time you’d take Black or Red. If you’re going second, half the time you’d take Black or sometimes Purple. If you don’t know or are alternating turn order, most of the time you’d take Black. All six colours are still present in the picks in all three cases, so still some uncertainty here.

- For multicolour games with unknown/alternating turn order, the most favoured deck of those recorded is [Necromancy]/Finesse/Strength, but that’s only picked about 4% of the time, so there’s no dominating strategy. In fact, of the 105 decks in recorded matches, all of them have a non-zero chance to be picked. There’s still a lot of uncertainty here, perhaps made most obvious by the fact that there are two decks with Bashing in the ten highest-weighted decks: [Bashing]/Demonology/Necromancy (#2, 3%) and [Bashing]/Fire/Peace (#7, 2%). Compare to MonoBlack (#21, 1.3%) and Nightmare (#30, 1.1%).

- In the recorded multicolour case, for unknown/alternating turn order, MonoBlack is the highest-weighted monocolour deck, at 1.3% of total weight.

- MonoGreen generally has the lowest weight in both monocolour and multicolour. Yes, lower than MonoBlue.

- Nash equilibria in both cases are slightly in favour of Player 1, so slightly that I don’t think it matters much.

I don’t know who was playing Bashing/Fire/Peace, but the #2 deck is being skewed by having a 100% win rate and only getting played in one event.

I know you’re doing something to tease out the player vs deck contributions, but intuitively I would expect a deck with this profile:

Player Average Win % = 55%

Player + Deck Average Win % = 60%

to be a better choice than this profile:

Player Average Win % = 85%

Player + Deck Average Win % = 100%