EDIT: Given that there has been some discussion about rankings on this forum before, e.g. discussion here on ranking individual specs, I should mention two things about what I'm planning for later analyses.

- I’m not trying to make objective rankings here. What I would like to do is to get a rough set of rankings, good spec pairings etc., and compare them against general opinions to see where they agree and where they don’t.

- I'll be trying to account for interactions, e.g. players being better with certain setups, or certain specs pairing together well. I'd also like to get some idea of individual matchups, where effectiveness depends on the other player's specs.

I also threw together a quick analysis; some initial results are below. These should be taken with a large grain of salt:

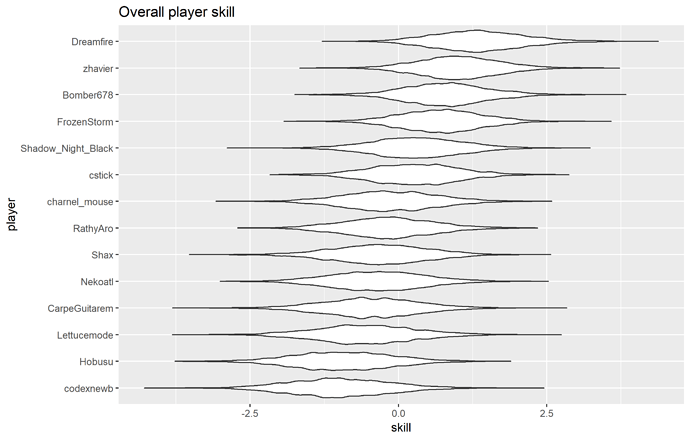

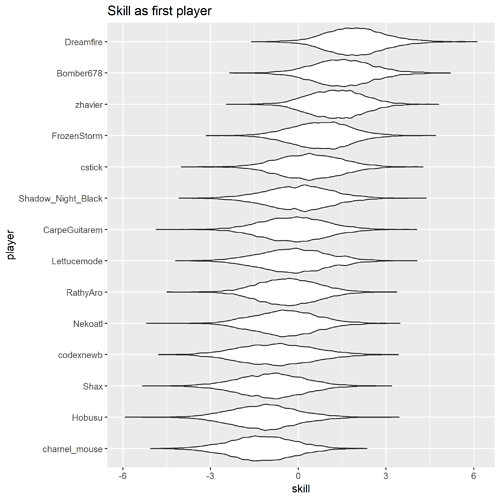

- Currently I only account for general first-player advantage, player skill, and the effect of player order on a player's skill (i.e. whether they're better or worse when going first). There's currently no accounting for decks, so if a player is shown below as being better/worse, that really refers to the performance of that player with their chosen deck, rather than that player in general.

- I’ve only compiled match data from CAMS 2018 so far.

- I've ignored matches that ended in a timeout.

Once I’ve got data from more tournaments, performance of decks and players can be teased out, and results should get more interesting. Still, hopefully the results below give an idea of what I’ll be doing.

Model details for stats nerds

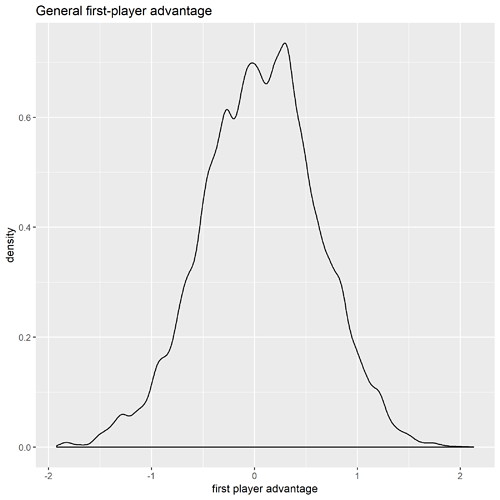

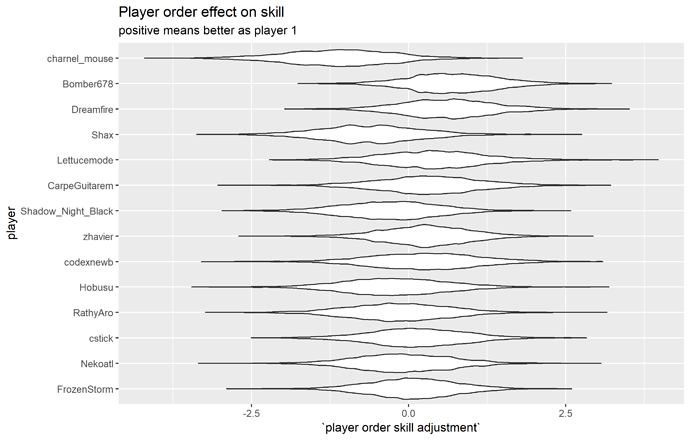

This is effectively a logistic regression model: a skill coefficient for each player, the average effect of player order, and each player's interaction with player order all have a linear effect on the log odds of winning a match. For each player, the skill effect of going second is the opposite of the effect of going first, so their general skill coefficient sits halfway between the two, which keeps the main effects easy to interpret.
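Written out, my reading of this model for a game where player i goes first against player j is below. The symbols are my own (α for the average first-player advantage, β for a player's general skill, γ for a player's order effect), and I'm assuming γ is added when a player goes first and subtracted when they go second, as described above:

```latex
\begin{aligned}
\operatorname{logit} \Pr(i \text{ beats } j \mid i \text{ goes first})
  &= \alpha + (\beta_i + \gamma_i) - (\beta_j - \gamma_j) \\
  &= \alpha + \beta_i - \beta_j + \gamma_i + \gamma_j
\end{aligned}
```

The second line shows a side effect of this parameterisation: a positive γ for either player pushes the log odds toward the first player, since it means i is stronger going first and j is weaker going second.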

Priors: all coefficients have independent simple normal priors.

Even without accounting for chosen specs / starter decks, this model is a pain to analyse exactly, so I ran an MCMC chain for 10000 iterations. Each proposal added an N(0,1) value to each model coefficient. Convergence was immediate, so no mucking around with burn-in times here. That's a pretty short chain, but all the marginal coefficient densities look roughly normal, so I reckon it's good enough. Analysis was done in R, and the MCMC code was written from scratch. I'll switch over to Stan once I've added deck effects.
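For anyone curious what a from-scratch sampler like this can look like, here's a minimal Python sketch of random-walk Metropolis under my reading of the model (not the actual R code). The coefficient layout, function names, and toy games are hypothetical, and I've assumed the "simple normal" prior is a standard normal N(0, 1):

```python
import math
import random


def log_sigmoid(x):
    """Numerically stable log(1 / (1 + exp(-x)))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def log_posterior(theta, games, n_players):
    """Unnormalised log posterior for the hypothetical coefficient layout
    theta = [first_adv, skill_0..skill_{n-1}, order_0..order_{n-1}].

    games: list of (first_idx, second_idx, first_won) tuples.
    Assumes an independent N(0, 1) prior on every coefficient.
    """
    first_adv = theta[0]
    skill = theta[1:n_players + 1]
    order = theta[n_players + 1:]
    # N(0, 1) log prior, dropping the additive constant.
    lp = -0.5 * sum(t * t for t in theta)
    for i, j, first_won in games:
        # Log odds that the first player (i) beats the second player (j).
        eta = first_adv + (skill[i] + order[i]) - (skill[j] - order[j])
        lp += log_sigmoid(eta) if first_won else log_sigmoid(-eta)
    return lp


def random_walk_metropolis(games, n_players, n_iter=10000, step=1.0, seed=0):
    """Random-walk Metropolis: each proposal adds N(0, step^2) noise to every
    coefficient at once. Returns all draws and the acceptance rate."""
    rng = random.Random(seed)
    theta = [0.0] * (1 + 2 * n_players)
    current_lp = log_posterior(theta, games, n_players)
    draws, accepted = [], 0
    for _ in range(n_iter):
        proposal = [t + rng.gauss(0.0, step) for t in theta]
        proposal_lp = log_posterior(proposal, games, n_players)
        # Symmetric proposal, so accept with probability min(1, posterior ratio).
        # 1.0 - rng.random() lies in (0, 1], so the log is always defined.
        if math.log(1.0 - rng.random()) < proposal_lp - current_lp:
            theta, current_lp = proposal, proposal_lp
            accepted += 1
        draws.append(list(theta))
    return draws, accepted / n_iter


# Hypothetical toy data: (first player, second player, did the first player win?)
toy_games = [(0, 1, True), (1, 2, True), (2, 0, False), (0, 2, True), (1, 0, False)]
draws, acceptance = random_walk_metropolis(toy_games, n_players=3, n_iter=2000)
print(f"acceptance rate: {acceptance:.2f}")
```

Proposing on every coefficient at once with a fixed unit step is simple, but the acceptance rate falls as the number of coefficients grows, which is one good reason to move to Stan's gradient-based sampler once deck effects are added.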

Players going first tended to do better, but not by much. First-player advantage is dwarfed by player effects.
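As a rough sketch of how "tended to do better, but not by much" can be put into numbers from MCMC output: the share of posterior draws where the first-player coefficient is positive, plus the win probability it implies for two evenly matched players. The function name and draws are hypothetical, and the plug-in probability (inverse-logit of the posterior mean) is a simplification rather than a full posterior average:

```python
import math
import statistics


def summarise_first_adv(draws):
    """Summarise posterior draws of the first-player advantage (log-odds scale).

    Returns (posterior mean, share of draws above zero, plug-in win probability
    for two otherwise identical players, i.e. inverse-logit of the mean).
    """
    mean = statistics.fmean(draws)
    share_positive = sum(d > 0 for d in draws) / len(draws)
    win_prob = 1.0 / (1.0 + math.exp(-mean))
    return mean, share_positive, win_prob


# Hypothetical draws, for illustration only.
mean, share_positive, win_prob = summarise_first_adv([0.1, 0.2, -0.05, 0.15])
print(mean, share_positive, win_prob)
```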

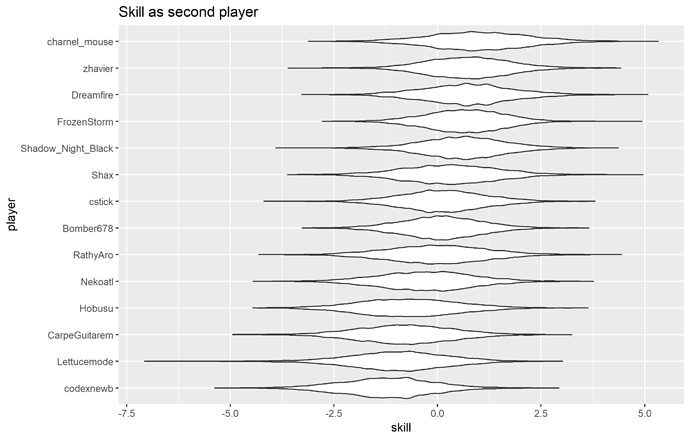

Player skill matches up pretty closely with final tournament results. The main exception I can see is Bomber. Lower-ranked players have wider skill uncertainty intervals because they played fewer games.
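One way to get per-player means and uncertainty intervals out of the chain is sketched below, assuming a central 95% interval taken from empirical quantiles of the draws (the interval width actually used isn't stated). The function name and draws are hypothetical:

```python
import statistics


def skill_table(skill_draws):
    """Posterior mean and central 95% interval for each player's skill.

    skill_draws: dict mapping player name -> list of posterior draws.
    Returns rows (player, mean, lower, upper), highest mean first.
    """
    rows = []
    for player, draws in skill_draws.items():
        # n=40 gives 39 cut points at 2.5%, 5%, ..., 97.5%.
        cuts = statistics.quantiles(draws, n=40)
        rows.append((player, statistics.fmean(draws), cuts[0], cuts[-1]))
    rows.sort(key=lambda row: row[1], reverse=True)
    return rows


# Hypothetical draws: "B" is spread more widely, as for a player with fewer games.
draws = {
    "A": [1.0 + 0.01 * k for k in range(-50, 51)],
    "B": [0.05 * k for k in range(-50, 51)],
}
for player, mean, lower, upper in skill_table(draws):
    print(f"{player}: {mean:.2f} [{lower:.2f}, {upper:.2f}]")
```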

Ranking high on this one means that you performed much better as player 1 than as player 2, or vice versa, so it's probably better to be lower-ranked here. I remember losing every time I was player 1 and winning every time I was player 2, since my codex couldn't do early aggression, so it makes sense that I'm ranked highest here.

I swear I didn’t set the latter one up on purpose.
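Assuming the player-order ranking sorts by the magnitude of each player's posterior mean order effect (my reading of "or vice versa"), it could be produced along these lines. The function name and draws are hypothetical:

```python
import statistics


def rank_by_order_effect(order_draws):
    """Rank players by the size of their player-order effect, largest first.

    order_draws: dict mapping player -> posterior draws of the order coefficient
    (positive = better going first, negative = better going second). Ranking
    uses the absolute posterior mean, so both directions count as "high".
    """
    means = {player: statistics.fmean(d) for player, d in order_draws.items()}
    return sorted(means.items(), key=lambda item: abs(item[1]), reverse=True)


# Hypothetical draws, for illustration only.
ranking = rank_by_order_effect({"A": [0.1, 0.2], "B": [-0.9, -1.1], "C": [0.5, 0.5]})
print(ranking)
```

Here "B" comes out on top despite a negative effect, because only the size of the gap between going first and going second matters for this ranking.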